The foundation of numerous written languages and computational systems lies in the Latin alphabet and numeric system. Proficiency in deciphering the diverse coding methods used to represent Latin letters and numerals is essential for professionals in fields such as computer science, cryptography, and communications. This article endeavors to offer an exhaustive and intricate exploration of the encoding systems and representations associated with Latin characters and numbers. It will illuminate key concepts, including ASCII, Unicode, and various other encoding mechanisms.

The Marvels of the Latin Alphabet

The Latin alphabet, a linguistic gem with its roots intertwined in the Etruscan and Greek scripts, stands as a testament to the evolution of human communication. This system, originally employed by the ancient Romans, comprises 26 letters, each donning two distinct forms: the uppercase and the lowercase. The beauty of the Latin alphabet lies not only in its historical significance but also in its contemporary global relevance, necessitating the development of standardized encoding systems to ensure seamless integration across various languages and digital platforms.

1.1 ASCII Code Unveiled: A Digital Linguistic Marvel

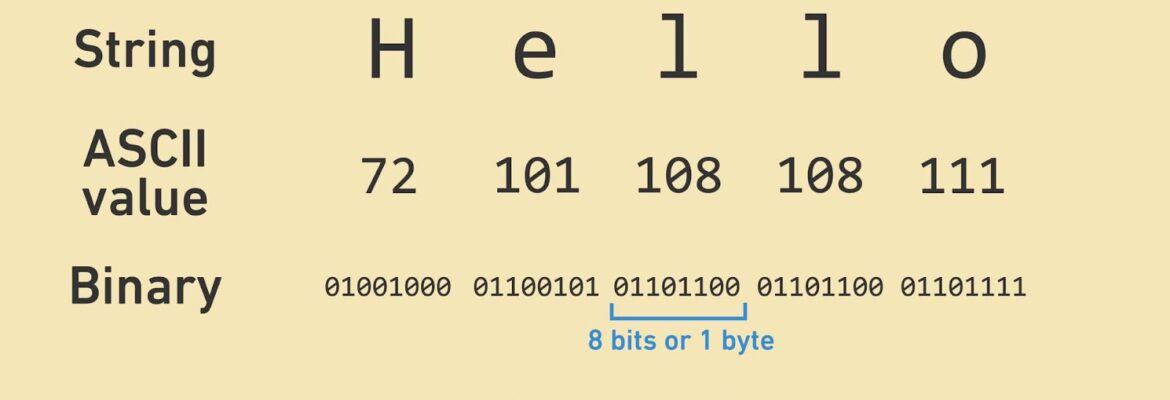

ASCII (American Standard Code for Information Interchange), often hailed as the pioneer of character encoding standards, is the technological backbone of modern computing’s ability to handle text. This ingenious system assigns a unique seven-bit binary number to each Latin letter, enabling computers to understand and manipulate textual data effortlessly. Dive into the ASCII universe, and you’ll find:

- Efficiency: ASCII’s concise seven-bit representations are efficient for storage and processing, making it a preferred choice for encoding text data;

- Universality: ASCII’s foundation is rooted in the Latin alphabet, yet it boasts a versatility that extends beyond. It includes control characters, numerals, and special symbols, making it a universal solution for text encoding;

- Compatibility: Nearly all modern programming languages and computer systems support ASCII, making it a cross-compatible choice for developers and programmers.

As a fascinating example, consider the capital letter ‘A,’ which ASCII elegantly represents as ‘1000001.’ Meanwhile, its lowercase counterpart, ‘a,’ transforms into ‘1100001.’ This encoding marvel simplifies the complex task of rendering and transmitting text in the digital realm.

1.2 Unicode: Bridging the Linguistic Chasm

In the grand tapestry of digital communication, Unicode emerges as a global diplomat, uniting diverse languages and scripts under a common umbrella. It transcends the limitations of ASCII by assigning a unique hexadecimal value to each character, not only within the Latin alphabet but also for characters and symbols spanning the world’s linguistic diversity. Delve into the world of Unicode, and you’ll uncover:

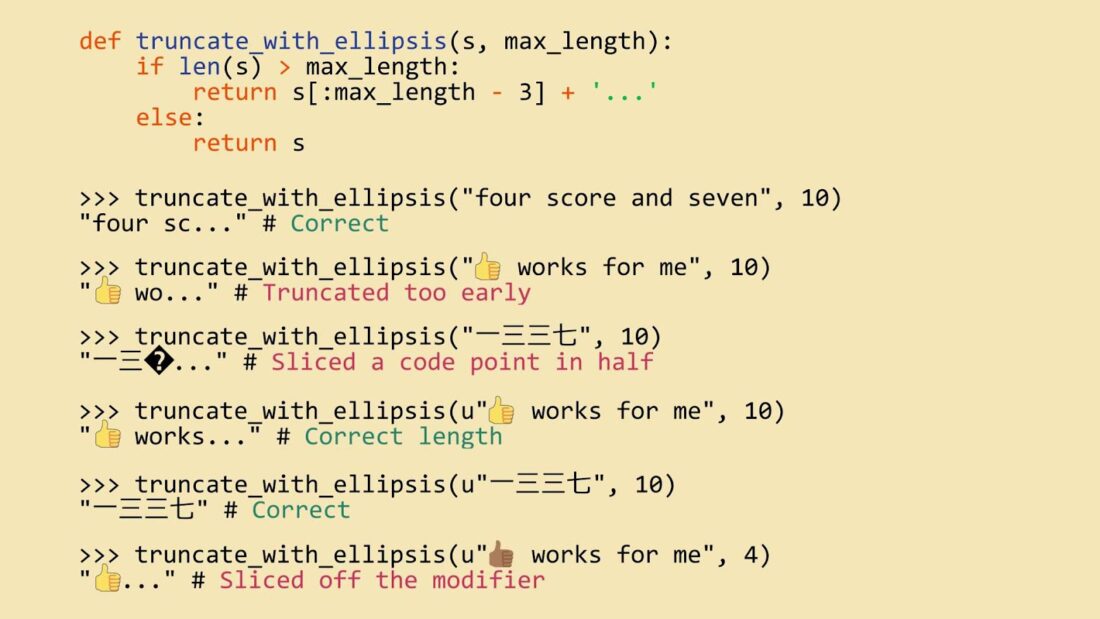

- Inclusivity: Unicode encompasses a staggering array of characters, spanning alphabets, ideograms, emojis, mathematical symbols, and more, providing a space for every language and symbol to coexist harmoniously;

- Interoperability: Whether you’re typing a text message on your smartphone or writing code on a computer, Unicode ensures that your characters will be displayed consistently, regardless of the platform, program, or language;

- Extensibility: Unicode continually evolves, accommodating newly recognized scripts and characters as the world of linguistics expands, preserving the cultural richness of global communication.

The Latin Numeric System

The Latin numeric system, more commonly recognized as Roman numerals, is a captivating and timeless method of representing values using a combination of letters from the Latin alphabet. This ingenious system adds a touch of history and mystique to numerical representation. As you delve into this numerical realm, you’ll discover the elegant simplicity and practicality of Roman numerals.

2.1 Modern Encoding: A Bridge to the Past

Roman numerals, although often associated with antiquity, still have a significant role in contemporary contexts such as clock faces and chapter numbering in books. However, the digital age predominantly relies on Arabic numerals for everyday calculations and communication. To bridge this historical gap, encoding systems like ASCII (American Standard Code for Information Interchange) and Unicode come into play.

- ASCII Code: ASCII assigns numerical codes to characters. For instance, the ASCII code for ‘1’ is ’49’ in hexadecimal notation;

- Unicode Advancement: Unicode takes a leap forward by accommodating complex representations of numbers. It not only includes standard Arabic numerals but also offers the ability to encode Latin (Roman) numerals alongside them. This versatility is invaluable for multilingual contexts and scientific notations.

2.2 Complex Representations: Unlocking the Multitude of Numeric Characters

Unicode, with its expansive character set, enables a richer tapestry of numeric characters used in various languages and scientific disciplines. Here’s how Unicode transforms the way we represent numbers:

- Multilingual Support: Unicode embraces a plethora of languages, allowing the representation of numerals from diverse linguistic backgrounds;

- Scientific Notations: From superscripts and subscripts to mathematical symbols, Unicode empowers researchers and scientists to represent numbers in intricate and precise ways;

- Universal Compatibility: Unicode ensures that these complex numeric representations can be used across different platforms and devices without loss of information or formatting issues.

Implementation and Usage

In the intricate web of modern technology, where data flows like a digital river, one fundamental aspect plays a pivotal role – character encoding. This unassuming yet crucial component has far-reaching implications in diverse fields, including computer science, linguistics, and data processing. Delving deeper into the realms of character encoding unveils its multifaceted significance in facilitating interoperability, ensuring consistency, and enabling secure communication. Let’s explore how character encoding is implemented and utilized across various domains.

3.1 Programming Languages: Harnessing the Power of Character Encoding

In the world of programming, character encoding is the cornerstone of text processing. It empowers developers to represent and manipulate text in a standardized and efficient manner. Different programming languages have recognized the paramount importance of character encoding, and as such, they offer built-in functions or libraries to handle this intricate task seamlessly. Here’s a closer look at how character encoding plays a vital role in programming:

Key Insights and Recommendations:

- Choose the Right Encoding: Selecting the appropriate character encoding, such as UTF-8 (Unicode Transformation Format), is crucial to ensure compatibility with various languages and scripts. UTF-8 can represent virtually all characters in the Unicode standard;

- Handle Encoding Errors Gracefully: Robust error handling is essential when dealing with character encoding. Be prepared to handle situations where text cannot be decoded correctly due to encoding mismatches;

- Stay Updated: Character encoding standards evolve over time. Keep abreast of the latest developments to ensure your software remains compatible with contemporary data;

- Optimize Encoding and Decoding Operations: Proficiently managing character encoding can significantly improve the performance of your software. Optimize encoding and decoding operations to reduce computational overhead.

3.2 Cryptography: Encoding for Data Security

In the intriguing world of cryptography, the importance of encoding Latin letters and numerals cannot be overstated. Character encoding forms the bedrock upon which secure communication and data protection are built. Cryptographers employ various encoding techniques to transform plain text into cipher text, making it unreadable to unauthorized entities. Here’s a glimpse into how character encoding is intertwined with cryptography:

Key Insights and Recommendations:

- Binary Transformation: Cryptographers often rely on encoding characters into binary representations. Each character is mapped to a unique binary sequence, enhancing security by obfuscating the original message;

- Hexadecimal Encoding: Hexadecimal encoding is another common technique. It represents characters using a base-16 numbering system, which is particularly useful when working with binary data and byte-level manipulation;

- Cipher Suites: Modern cryptographic protocols, like TLS (Transport Layer Security), rely on specific cipher suites that include character encoding as part of the encryption process. Ensure your cryptographic implementations adhere to industry-standard cipher suites for robust security;

- Character Substitution: In some cryptographic algorithms, characters are substituted with other characters or symbols based on predefined rules or keys. Understanding the encoding scheme is essential for both encryption and decryption.

Conclusion

The Latin alphabet and numeric system stand as fundamental pillars within contemporary channels of communication and computational frameworks. A profound grasp of their multifaceted encoding and representation structures, exemplified by entities like ASCII and Unicode, proves absolutely vital across a multitude of fields, spanning from the realms of computer science, cryptography, to the intricacies of linguistics. This all-encompassing handbook aspires to shed light upon the intricacies of Latin letter and numeral encoding, providing invaluable perspectives on their integration and utilization within the ever-evolving landscape of modern technology.